List Crawling 2025: Master Easy Data

Why List Crawling is Essential for Modern Data Collection

List crawling is a specialized form of web scraping that focuses on extracting structured data from web pages containing lists or collections of similar items. Unlike general web scraping that might target diverse information across a website, list crawling specifically targets repetitive data patterns like product catalogs, business directories, or search results.

Quick Answer: What is List Crawling?

- Purpose: Extract structured data from web-based lists automatically

- Focus: Product listings, directories, search results, tables, and articles

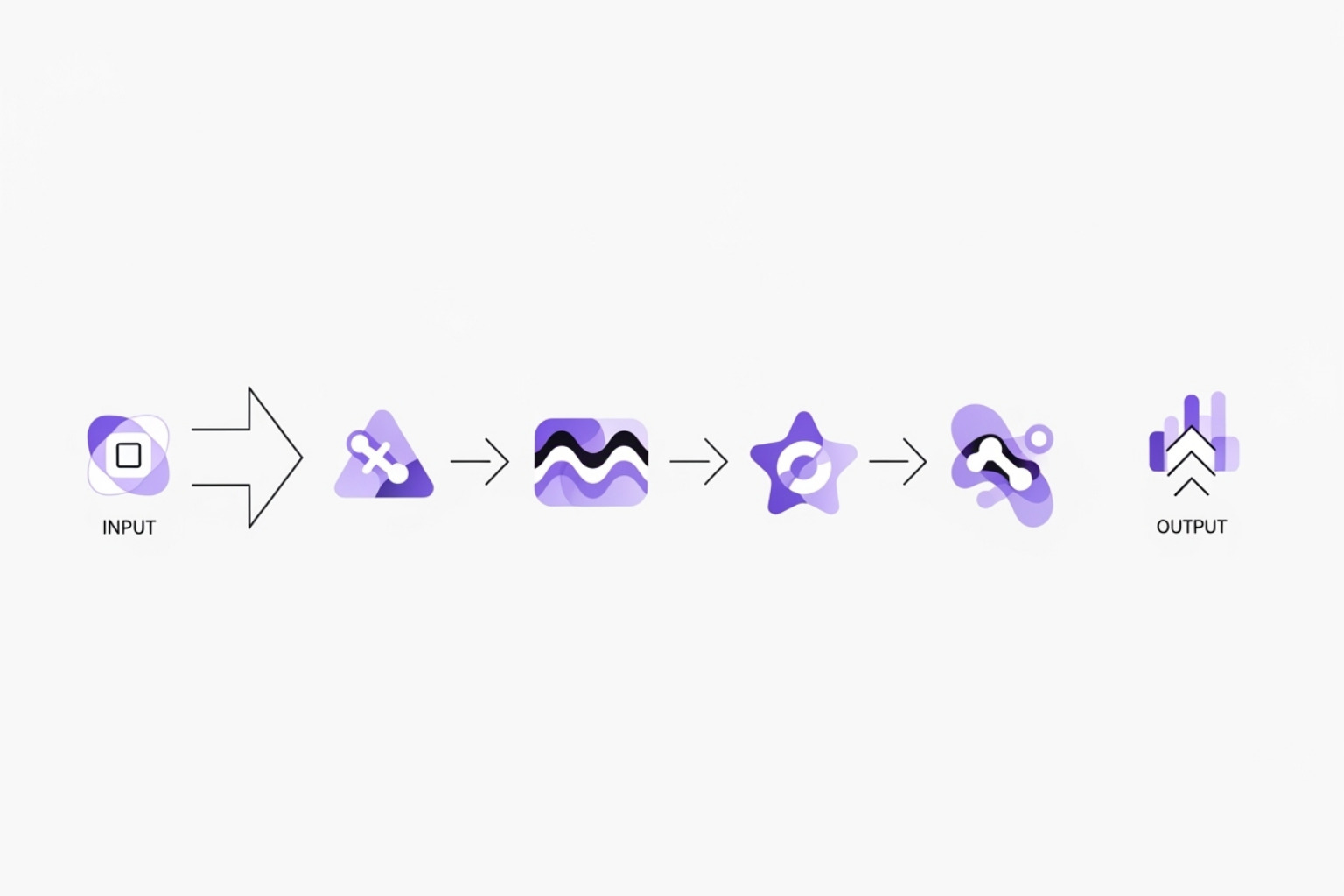

- Process: Identify target lists → Configure crawler → Extract data → Clean and export

- Benefits: Saves time, improves accuracy, reduces manual work

- Common formats: CSV, JSON, Excel for easy analysis

The modern digital world treats information as “the new gold,” and businesses rely heavily on online data to make smart decisions. Whether you’re tracking competitor prices on e-commerce sites, building sales leads from business directories, or gathering market research from review sites, list crawling automates the tedious process of manually collecting this information.

This technique is particularly valuable because it can handle the web’s many list formats – from simple static pages to complex paginated content and infinite scroll layouts. The key advantage? What might take hours of manual copying and pasting can be completed in minutes with the right approach.

List crawling also plays a crucial role in SEO. It helps detect broken links, analyze competitor strategies, and monitor keyword rankings across different locations. When done correctly, it boosts website performance and allows businesses to expand their data capabilities significantly.

Basic list crawling glossary:

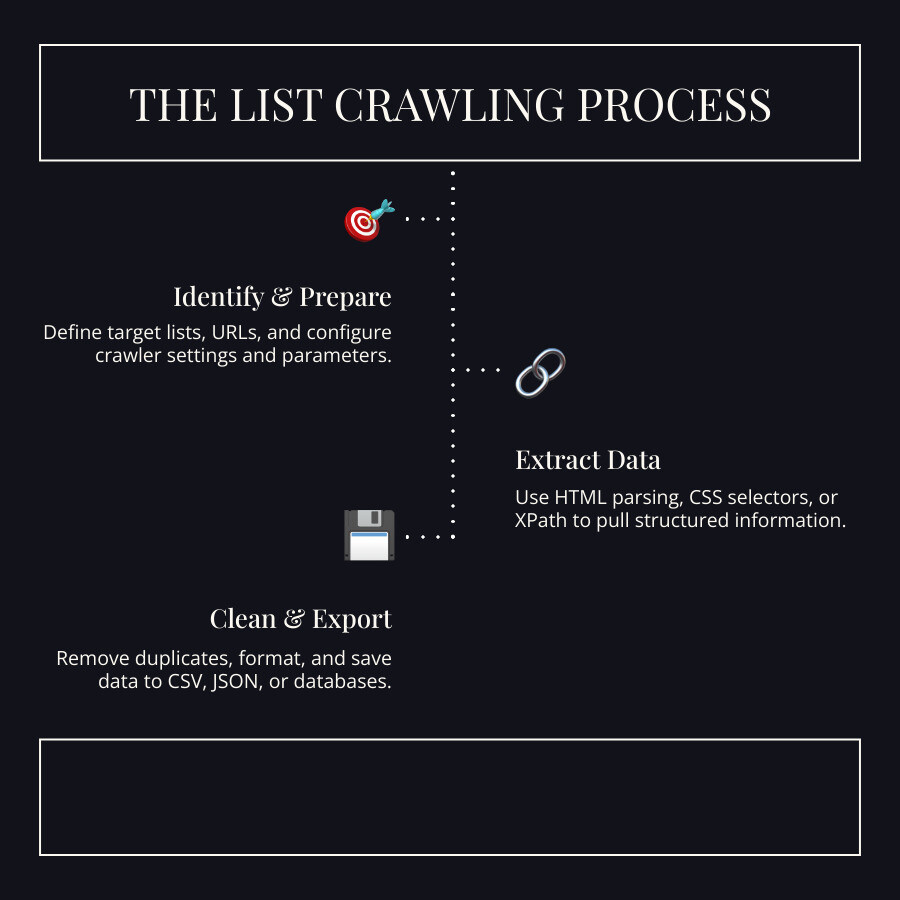

The Core Process: A Step-by-Step Guide

When you break it down, list crawling is really just a smart way to teach computers to collect organized information from websites. Think of it like having a super-efficient research assistant who never gets tired and can work through thousands of web pages in the time it takes you to grab a coffee.

The beauty of list crawling lies in its systematic approach. Instead of randomly grabbing data from websites, we follow a clear three-step process that ensures we get exactly what we need, when we need it, in a format we can actually use.

Step 1: Identify Target Lists and Define Parameters

Before we dive into the technical stuff, we need to play detective. The first step is finding websites with publicly accessible data that’s organized in structured lists. We’re looking for those golden opportunities where information appears in predictable patterns.

Maybe you’re interested in tracking car prices across different dealership websites, or perhaps you want to gather customer reviews from automotive forums. The key is spotting those repetitive data fields that follow the same format over and over again.

Once you’ve found your target, it’s time to set some ground rules. Defining scope means deciding exactly which parts of the website you’ll explore. Will you focus on specific categories? How deep will you go into the site’s structure?

Pagination is another crucial consideration. Many websites split their lists across multiple pages, and you’ll need to figure out how to navigate through them all. Some sites use simple “Next” buttons, while others have numbered page links or even infinite scrolling.

Finally, you’ll want to identify the specific data points to extract from each item. If you’re crawling a car listing site, you might want the make, model, year, price, mileage, and dealer contact information. Being specific here saves you tons of time later.
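It can help to write these decisions down before any crawling starts. Here is a minimal sketch, assuming a hypothetical car listing site; the `CrawlPlan` name, the URL, and the field names are all made up for illustration and don't come from any particular tool:

```python
from dataclasses import dataclass


@dataclass
class CrawlPlan:
    """Scope, pagination limit, and target fields for one list crawl."""
    start_url: str
    allowed_paths: tuple   # path prefixes that are in scope
    max_pages: int         # stop following pagination after this many pages
    fields: tuple = ()     # data points to extract from each list item

    def in_scope(self, path: str) -> bool:
        # A path is in scope if it starts with one of the allowed prefixes.
        return any(path.startswith(prefix) for prefix in self.allowed_paths)


# Hypothetical values for a car listing crawl.
plan = CrawlPlan(
    start_url="https://example.com/used-cars?page=1",
    allowed_paths=("/used-cars",),
    max_pages=20,
    fields=("make", "model", "year", "price", "mileage", "dealer_contact"),
)

print(plan.in_scope("/used-cars/suv"))  # inside the chosen category
print(plan.in_scope("/blog/news"))      # outside the crawl scope
```

Keeping scope, page limits, and field names in one place makes it easier to review the plan before a single request goes out.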

Step 2: Extracting the Data

Now comes the exciting part – actually pulling the data from those web pages. This is where list crawling gets a bit technical, but don’t worry, it’s not as scary as it sounds.

Every web page is built from HTML, which is like the skeleton that holds everything together. To extract data, our crawlers need to parse this code and find exactly where the good stuff is hiding.

We use CSS selectors and XPath expressions to pinpoint the exact location of our target data. Think of these as GPS coordinates for information on a web page. They help our crawler navigate directly to the product titles, prices, or contact details we’re after.
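To make the idea concrete, here is a small sketch using only Python's standard library. It assumes a made-up, well-formed snippet of markup; real pages are rarely this tidy, so in practice a forgiving parser such as Beautiful Soup would usually do this job. The class names `item`, `title`, and `price` are assumptions for the example:

```python
import xml.etree.ElementTree as ET

# Made-up, well-formed markup standing in for a product list page.
PAGE = """
<html><body>
  <ul id="products">
    <li class="item"><span class="title">Roof rack</span><span class="price">$120</span></li>
    <li class="item"><span class="title">Floor mats</span><span class="price">$45</span></li>
    <li class="ad"><span class="title">Sponsored</span></li>
  </ul>
</body></html>
"""

root = ET.fromstring(PAGE.strip())

items = []
# XPath: every <li> whose class attribute is "item", anywhere in the document.
# A roughly equivalent CSS selector would be: li.item
for li in root.findall(".//li[@class='item']"):
    items.append({
        "title": li.findtext("span[@class='title']"),
        "price": li.findtext("span[@class='price']"),
    })

print(items)
```

Notice that the sponsored entry is skipped simply because it doesn't match the expression, which is exactly why precise selectors matter.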

Modern websites love to show off with fancy features, which means we often encounter dynamic content that loads after the page appears. This happens when websites use JavaScript rendering to populate lists or load content as you scroll. It’s like trying to photograph a moving target – you need special tools to handle it properly.

For serious list crawling projects, many professionals turn to Scrapy, a powerful framework designed specifically for this kind of work. It’s built to handle multiple requests at once, making it incredibly efficient for large-scale data collection projects.

Step 3: Cleaning and Exporting the Final Dataset

Raw data straight from websites is rarely ready to use – it’s like fresh vegetables that need washing and chopping before they go into your recipe. This final step is where we transform messy, inconsistent information into something genuinely useful.

Removing duplicates is usually our first priority. Websites often repeat information across different pages, and our crawlers can accidentally pick up the same data multiple times. Nobody wants a spreadsheet full of duplicate entries cluttering up their analysis.

Formatting correction comes next. Dates might appear as “2024-01-15” on one page and “January 15th, 2024” on another. Prices could be listed as “$25,000” or “25000 USD.” We standardize these inconsistencies so everything plays nicely together.
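Here is a rough sketch of both cleanup steps, using the example formats mentioned above. It assumes whole-number prices and English month names, and the function names are our own; a real project would need to handle more formats than these:

```python
import re
from datetime import date, datetime


def normalize_price(raw: str) -> int:
    """Turn '$25,000' or '25000 USD' into 25000 (assumes whole-number prices)."""
    return int(re.sub(r"[^\d]", "", raw))


def normalize_date(raw: str) -> date:
    """Accept '2024-01-15' and 'January 15th, 2024' (assumes English month names)."""
    cleaned = re.sub(r"(\d)(st|nd|rd|th)", r"\1", raw.strip())
    for fmt in ("%Y-%m-%d", "%B %d, %Y"):
        try:
            return datetime.strptime(cleaned, fmt).date()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date format: {raw!r}")


def dedupe(rows):
    """Keep the first occurrence of each row, preserving order."""
    seen, unique = set(), []
    for row in rows:
        key = tuple(sorted(row.items()))
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique


# The same listing, scraped from two pages in two different formats.
raw_rows = [
    {"title": "Sedan", "price": "$25,000", "listed": "2024-01-15"},
    {"title": "Sedan", "price": "25000 USD", "listed": "January 15th, 2024"},
]
cleaned = [
    {"title": r["title"],
     "price": normalize_price(r["price"]),
     "listed": normalize_date(r["listed"])}
    for r in raw_rows
]
unique = dedupe(cleaned)
print(unique)
```

One design note: in this sketch the values are standardized before duplicates are removed, because the two raw rows above only look identical once their prices and dates share a format.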

The final step involves filtering irrelevant content and preparing the data for storage. Sometimes our crawlers pick up extra information we don’t actually need – like navigation menus or advertisements that got caught in our data net.

When it’s time to save our cleaned data, we have several options. CSV files work great for simple spreadsheet analysis. JSON format is perfect when you need to share data between different systems. For larger projects, SQL databases provide the power to handle massive datasets and complex queries.
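All three options are available in Python's standard library. The sketch below uses a couple of made-up sample rows and writes to in-memory targets so it runs anywhere; swapping in a real file path or database file is the only change needed for actual storage:

```python
import csv
import io
import json
import sqlite3

# Made-up sample rows standing in for cleaned crawl output.
rows = [
    {"make": "Toyota", "model": "RAV4", "year": 2021, "price": 25000},
    {"make": "Subaru", "model": "Outback", "year": 2020, "price": 23500},
]
columns = ["make", "model", "year", "price"]

# CSV: written to an in-memory buffer here; pass an open file to write to disk.
csv_buffer = io.StringIO()
writer = csv.DictWriter(csv_buffer, fieldnames=columns)
writer.writeheader()
writer.writerows(rows)
csv_text = csv_buffer.getvalue()

# JSON: one call turns the list of dicts into a shareable string.
json_text = json.dumps(rows, indent=2)

# SQL: an in-memory SQLite database; use a filename instead of ":memory:" to persist.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE listings (make TEXT, model TEXT, year INTEGER, price INTEGER)"
)
conn.executemany(
    "INSERT INTO listings VALUES (:make, :model, :year, :price)", rows
)
cheapest = conn.execute(
    "SELECT make, model FROM listings ORDER BY price LIMIT 1"
).fetchone()

print(csv_text)
print(cheapest)
```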

The goal is always the same: turning the overwhelming amount of information scattered across the web into organized, actionable insights you can actually use. For more advanced techniques on optimizing your extracted data for analysis, check out this guide on how to extract data for faster performance.

Tools and Techniques for Effective List Crawling

Choosing the right tools for your list crawling adventure is a bit like picking the perfect vehicle for a road trip. Do you need a rugged off-roader for challenging terrains (complex websites), or a sleek, easy-to-drive sedan for smooth highways (simple, structured sites)? The answer depends on your destination and your driving skills!

When it comes to list crawling, we have two main paths ahead of us: building our own custom tools with code or using ready-made software solutions. Each approach has its sweet spots, and the best choice really depends on your technical comfort level and project needs.

Common List Types and How to Crawl Them

The web serves up lists in many different flavors, and each one needs its own special approach. Think of it like cooking different dishes – you wouldn’t use the same technique for making soup and baking bread!

Paginated content is probably the most common type you’ll encounter. These are lists spread across multiple pages with those familiar “Next” or page number buttons at the bottom. The trick here is teaching your crawler to follow these links automatically, jumping from page to page until it reaches the end. Some websites get sneaky and limit how many pages you can view (usually around 20-50 pages), so we need to plan for that.
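A pagination loop might look something like the sketch below. It assumes the site marks its "Next" link with `rel="next"`, which many sites do not, and it uses a tiny fake site (a dictionary of made-up pages) in place of real HTTP requests so the logic can be run as-is:

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class NextLinkFinder(HTMLParser):
    """Records the href of the first <a rel="next"> link on a page."""

    def __init__(self):
        super().__init__()
        self.next_href = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "a" and attrs.get("rel") == "next" and self.next_href is None:
            self.next_href = attrs.get("href")


def crawl_pages(fetch, start_url, max_pages=50):
    """Follow "next" links from start_url and return the URLs visited."""
    visited = []
    url = start_url
    # Stop at the last page, on a loop back to a seen page, or at the page cap.
    while url and url not in visited and len(visited) < max_pages:
        visited.append(url)
        finder = NextLinkFinder()
        finder.feed(fetch(url))
        url = urljoin(url, finder.next_href) if finder.next_href else None
    return visited


# Stand-in for a real site: three pages, the last one has no "next" link.
FAKE_SITE = {
    "https://example.com/list?page=1": '<a rel="next" href="?page=2">Next</a>',
    "https://example.com/list?page=2": '<a rel="next" href="/list?page=3">Next</a>',
    "https://example.com/list?page=3": "<p>End of list</p>",
}

print(crawl_pages(FAKE_SITE.__getitem__, "https://example.com/list?page=1"))
```

The `max_pages` cap is there on purpose: it keeps the crawler from running away on sites with very long or looping pagination.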

Infinite scroll lists are the modern web’s favorite child. You know the drill – scroll down, and magically, more content appears. These require a bit more muscle because simple requests won’t cut it. We need to use headless browsers that can actually simulate scrolling, waiting for new content to load before grabbing it. It’s like having a robot finger that keeps scrolling for you.

Tables and tabular data might seem straightforward since they’re nicely organized in rows and columns. The catch? Many websites create table-like layouts using fancy CSS tricks instead of actual HTML tables. It’s like someone arranging their bookshelf to look like a library catalog – it looks organized, but you need to know the system to find what you’re looking for.
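For pages that do use real HTML tables, the extraction can be fairly mechanical. Here is a minimal sketch with the standard library's `HTMLParser`; the `TableParser` class and the sample table are made up for illustration, and CSS-based "tables" would need selector-based extraction instead:

```python
from html.parser import HTMLParser


class TableParser(HTMLParser):
    """Collects the text of each <th>/<td> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None    # cells of the row currently being read
        self._cell = None   # text fragments of the cell currently being read

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None


# Made-up sample table.
HTML = (
    "<table>"
    "<tr><th>Model</th><th>Price</th></tr>"
    "<tr><td>Sedan</td><td>$25,000</td></tr>"
    "</table>"
)

parser = TableParser()
parser.feed(HTML)

# Treat the first row as the header and pair it with each following row.
header, *body = parser.rows
records = [dict(zip(header, row)) for row in body]
print(records)
```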

Article lists pop up everywhere – news sites, blogs, research portals. We’re usually hunting for titles, dates, and summaries. The good news is that most articles follow similar patterns, making them relatively friendly targets for list crawling.

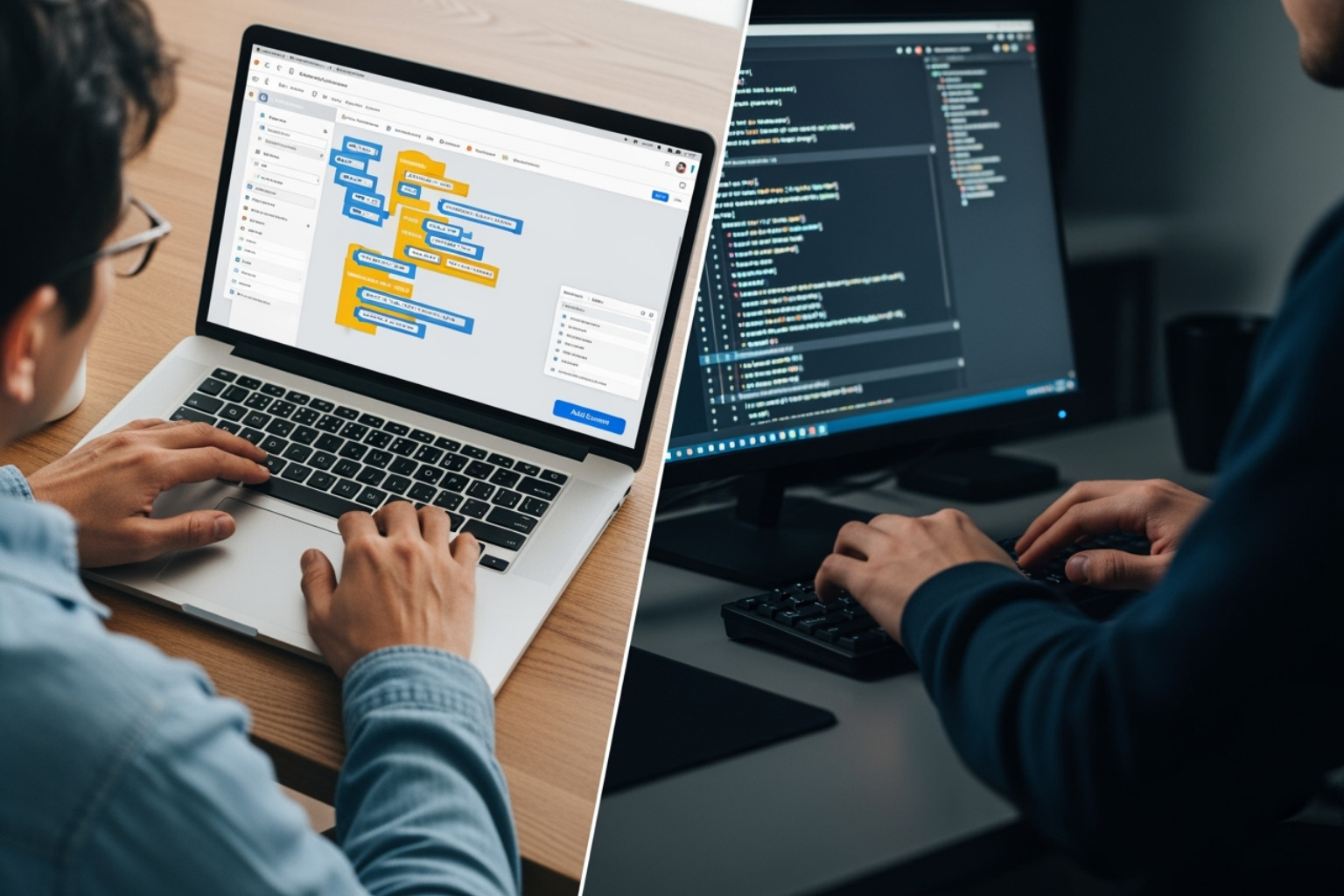

Choosing Your Toolkit: Code vs. No-Code Solutions

Here’s where things get interesting. Your choice of tools really comes down to whether you want to build from scratch or use something ready-made.

Code-based solutions are like having a custom-built race car. If you know Python (or are willing to learn), you get incredible control and flexibility. The requests library handles the heavy lifting of fetching web pages, while Beautiful Soup becomes your best friend for parsing through HTML. Think of Beautiful Soup as your digital detective – it knows exactly how to find the clues you’re looking for in the messy world of HTML code. You can dive deeper into its capabilities through the Beautiful Soup documentation.

For serious list crawling projects, Scrapy steps up as the professional-grade framework. It’s like upgrading from a bicycle to a motorcycle – suddenly you can handle much bigger jobs with features like automatic request scheduling and built-in data processing.

No-code scraping software is perfect for those who prefer point-and-click simplicity. Visual scrapers and browser extensions let you select data elements right on the webpage – no coding required. It’s like using a highlighter on a printed page, except the computer remembers exactly what you highlighted and can do it again on thousands of similar pages.

The trade-off? Code gives you more power and flexibility, while no-code tools get you started faster but might hit walls with really complex websites.

The Future of Extraction: AI and Machine Learning

The exciting news is that list crawling is getting smarter thanks to artificial intelligence. We’re moving toward a future where crawlers can think more like humans.

AI-powered parsing means crawlers that understand context, not just code. Imagine a system that recognizes a product list even when the website completely changes its design. That’s the kind of smart adaptation we’re heading toward.

Automated pattern recognition is already reducing the tedious setup work. Instead of manually defining exactly where to find each piece of data, machine learning algorithms can spot patterns and figure out the structure themselves. It’s like having an assistant who learns your preferences and starts anticipating what you need.

Natural Language Processing is opening up new possibilities for extracting meaning from unstructured text within lists. Whether it’s analyzing product reviews or categorizing article topics, NLP can turn messy text into organized insights.

The ultimate goal? Reduced manual configuration that lets crawlers work more independently. We’re seeing early signs of this with systems that can intelligently navigate websites and extract data with minimal human guidance.

For more insights into how technology is reshaping our digital landscape, check out our guide on Future Car Innovations. The same innovative spirit driving automotive tech is revolutionizing how we collect and use web data.

Best Practices, Challenges, and Ethical Guidelines

Let’s be honest – list crawling isn’t always smooth sailing. It’s a bit like trying to gather information at a crowded party where some hosts are friendly and welcoming, while others have bouncers at the door checking IDs. The web has become increasingly protective of its data, and for good reason. But with the right approach, we can still gather the information we need while being respectful guests.

Overcoming Key Challenges in List Crawling

Think of modern websites as sophisticated security systems – they’ve gotten really good at spotting automated visitors. But don’t worry, every challenge has a solution.

Dynamic websites are probably the trickiest obstacle we’ll face. These sites love to load content with JavaScript, which means our simple HTTP requests might come back empty-handed. The solution? We bring out the big guns – browser automation tools like Selenium or Puppeteer, which drive a real browser in headless mode. These tools render the page just as a visitor’s browser would, waiting for all that JavaScript magic to happen before we extract our data.

IP blocks and CAPTCHAs are the web’s way of saying “slow down there, partner.” When websites detect too many requests from the same IP address, they’ll either block us entirely or throw up those annoying “prove you’re human” puzzles. This is where our strategy of using proxies and rotating user-agents becomes essential.

Changing website layouts can break our crawlers faster than you can say “website update.” One day your crawler is humming along perfectly, and the next day it’s collecting garbage because the site redesigned their product pages. The trick is building flexible crawlers that use general selectors and monitoring them regularly so we can adapt quickly when changes happen.

Rate limiting is actually a good thing – it means the website cares about staying online for everyone. We respect this by adding delays between our requests, typically 1-3 seconds, to mimic how a real person might browse. If we get error messages like “429 Too Many Requests,” we back off even more, sometimes doubling our wait time until things cool down.
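The delay-and-back-off idea can be sketched in a few lines. The `fetch_politely` function below is our own illustration, not part of any library; it assumes a `fetch(url)` callable that returns a `(status, body)` pair, and it takes the `sleep` function as a parameter so the logic can be tried without real waiting:

```python
import time


def fetch_politely(fetch, url, base_delay=2.0, max_retries=4, sleep=time.sleep):
    """Call fetch(url) -> (status, body), backing off while the server answers 429."""
    delay = base_delay
    for _ in range(max_retries + 1):
        sleep(delay)                  # pause before every request
        status, body = fetch(url)
        if status != 429:
            return status, body
        delay *= 2                    # double the wait after "Too Many Requests"
    raise RuntimeError(f"still rate limited after {max_retries} retries: {url}")


# Fake server for demonstration: rate limits twice, then answers normally.
responses = iter([(429, ""), (429, ""), (200, "<html>list page</html>")])
waits = []
result = fetch_politely(
    lambda url: next(responses),
    "https://example.com/list?page=1",
    sleep=waits.append,               # record the waits instead of sleeping
)
print(result[0], waits)
```

Some servers also send a `Retry-After` header with a 429 response; when it is present, honoring that value is usually a better choice than guessing.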

Error handling separates the pros from the beginners. Networks hiccup, servers get overwhelmed, and sometimes pages just don’t load correctly. Good list crawling means expecting these problems and having backup plans ready.

When we’re dealing with massive sites – imagine trying to crawl all of IMDb’s 130 million pages – we’re looking at serious scale. Even at a sustained rate of about eight pages per second, a single crawler would need roughly six months just to visit every page once! For projects this big, we need to think about distributing the work across multiple machines and managing enormous amounts of storage.
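A quick back-of-the-envelope calculation shows how sensitive that estimate is to crawl rate. The rates and worker counts below are assumptions chosen for illustration; about eight pages per second is roughly what a six-month crawl of 130 million pages implies:

```python
PAGES = 130_000_000
SECONDS_PER_DAY = 24 * 60 * 60


def crawl_days(pages, pages_per_second, workers=1):
    """Days needed to fetch every page once at a steady rate per worker."""
    return pages / (pages_per_second * workers) / SECONDS_PER_DAY


fast_single = crawl_days(PAGES, 8)               # one crawler, 8 pages/second
polite_single = crawl_days(PAGES, 0.5)           # one request every 2 seconds
distributed = crawl_days(PAGES, 8, workers=16)   # 16 machines at 8 pages/second

print(round(fast_single), round(polite_single), round(distributed, 1))
```

At a polite one request every two seconds, a single crawler would need more than eight years, which is why crawls of this size get spread across many machines.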

The Role of Proxies and User-Agents

Here’s where list crawling gets a bit like playing digital dress-up. Websites identify us by our IP address and something called a user-agent string – basically our digital fingerprint.

IP rotation through proxies is like having multiple addresses. Instead of all our requests coming from the same location, proxies let us route traffic through different IP addresses around the world. This helps us avoid getting blocked and can even bypass geographic restrictions that might hide certain content based on location.

User-agent rotation is about changing our digital identity. Every browser sends a little introduction when it visits a website – “I’m Chrome version 118 on Windows” or “I’m Firefox on Mac.” By rotating these identifiers, we can blend in with regular traffic instead of screaming “I’m a bot!”

The combination of these techniques helps us maintain anonymity and security while gathering data. It’s not about being sneaky – it’s about being a polite, distributed presence rather than a single overwhelming force.
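Here is one simple way rotation could be wired up, cycling through small pools in round-robin order. The proxy addresses are placeholders, the user-agent strings are only illustrative, and `next_request_settings` is a hypothetical helper of our own:

```python
from itertools import cycle

# Illustrative user-agent strings; real ones should match current browsers.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/118.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10.15; rv:118.0) Firefox/118.0",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) Version/17.0 Safari/605.1.15",
]
# Placeholder proxy addresses, not real servers.
PROXIES = [
    "http://proxy-a.example:8080",
    "http://proxy-b.example:8080",
]

ua_pool = cycle(USER_AGENTS)
proxy_pool = cycle(PROXIES)


def next_request_settings():
    """Return headers and proxy settings for the next outgoing request."""
    proxy = next(proxy_pool)
    return {
        "headers": {"User-Agent": next(ua_pool)},
        "proxies": {"http": proxy, "https": proxy},
    }
```

The returned dictionary is shaped so it could be unpacked into a call like `requests.get(url, **next_request_settings())`, since the requests library accepts `headers` and `proxies` keyword arguments.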

For those curious about the broader landscape of automated web traffic, resources like the list of crawlers IP addresses show just how much automated activity happens online – over 1.4 million categorized IP addresses are tracked in databases like Udger’s.

Legal and Ethical Considerations for List Crawling

Now for the serious talk – because responsible list crawling isn’t just about technical skills, it’s about being a good digital citizen.

Respecting robots.txt is like knocking before entering someone’s house. Most websites have a robots.txt file that acts like a “Do Not Disturb” sign for certain areas. When we see instructions in this file, we follow them. Tools like Scrapy even have built-in settings (like ROBOTSTXT_OBEY) that automatically respect these rules. You can learn more about this standard at the robots.txt documentation.
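Outside of Scrapy, Python's standard library can do the same check with `urllib.robotparser`. The sketch below parses a made-up robots.txt from a string so it runs without a network connection; the crawler name `my-list-crawler` is an assumption:

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt content for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

rules = RobotFileParser()
rules.parse(ROBOTS_TXT.splitlines())

allowed = rules.can_fetch("my-list-crawler", "https://example.com/cars?page=1")
blocked = rules.can_fetch("my-list-crawler", "https://example.com/private/data")
delay = rules.crawl_delay("my-list-crawler")

print(allowed, blocked, delay)
```

For a live site, calling `set_url()` with the site's robots.txt address and then `read()` would load the real file instead. Not every site sets a `Crawl-delay`, so `crawl_delay()` may return `None`.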

Website Terms of Service are the house rules. Before we start any crawling project, we read through the website’s terms carefully. Some sites explicitly say “no automated access allowed,” and while the legal enforceability varies by jurisdiction, it’s a clear signal of the website owner’s preferences.

Data privacy laws like GDPR in Europe and CCPA in California have changed the game entirely. We need to be extra careful about collecting any personal information, and we absolutely avoid scraping copyrighted or private data – that’s clearly illegal territory.

Avoiding server overload is just common courtesy. Imagine if someone knocked on your door 100 times per minute – you’d probably stop answering pretty quickly! We configure our crawlers with reasonable delays and limits to ensure we’re not disrupting the website’s normal operations.
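For Scrapy users, this courtesy mostly comes down to a few project settings. The setting names below are standard Scrapy settings, but the values are only assumed starting points that should be tuned for each site:

```python
# settings.py (Scrapy project settings); values are illustrative assumptions.
ROBOTSTXT_OBEY = True                 # honor the site's robots.txt rules
DOWNLOAD_DELAY = 2                    # seconds to wait between requests to a site
CONCURRENT_REQUESTS_PER_DOMAIN = 2    # cap parallel requests per domain
AUTOTHROTTLE_ENABLED = True           # adapt delays to how the server responds
```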

The golden rule of ethical list crawling is simple: gather the data you need without causing harm to others. It’s about finding that sweet spot where we get valuable insights while respecting the digital ecosystem we’re all part of.

Real-World Applications and How List Crawling Improves SEO

When we talk about list crawling, we’re not just discussing some abstract technical concept. This powerful technique is actively changing how businesses operate and compete in today’s data-driven world. It’s the difference between spending hours manually copying competitor prices and having that information updated automatically every morning with your coffee.

Practical Business Use Cases

The beauty of list crawling lies in its versatility. Whether you’re running a small online store or managing a large corporation, there’s likely a way this technique can make your life easier and your business more competitive.

E-commerce price tracking represents one of the most immediate applications. Imagine you’re selling electronics online, and you want to stay competitive. Instead of manually checking dozens of competitor websites daily, list crawling can automatically monitor their prices, product availability, and even customer reviews. This real-time intelligence lets you adjust your pricing strategy instantly, ensuring you never lose a sale to a competitor who dropped their prices overnight.

Generating sales leads becomes remarkably efficient with the right approach. Sales teams can crawl business directories, professional networking platforms, and industry-specific websites to build comprehensive prospect lists. The crawler gathers contact information, company details, and even recent news about potential clients. What used to take weeks of manual research now happens in hours.

Content aggregation serves news outlets, bloggers, and researchers who need to stay on top of industry trends. Rather than manually visiting hundreds of websites, list crawling can collect articles, press releases, and announcements from relevant sources. This creates a personalized news feed that ensures nothing important slips through the cracks.

The automotive industry particularly benefits from this approach. When researching the best cars for outdoor adventures, list crawling can extract specifications, reviews, and pricing information from multiple automotive websites. This comprehensive data collection enables detailed comparisons that would be nearly impossible to compile manually.

Market analysis through customer reviews provides invaluable insights into consumer sentiment. By crawling review sites and e-commerce platforms, businesses can track what customers are saying about their products versus competitors. This feedback loop helps identify strengths to emphasize and weaknesses to address.

Boosting Your SEO Strategy

Here’s where list crawling becomes truly exciting for website owners and digital marketers. It’s like having a tireless assistant who never sleeps, constantly monitoring your website’s health and your competitors’ strategies.

Website monitoring through list crawling acts as your site’s health check system. The crawler systematically examines every page, identifying broken links that frustrate visitors and hurt search engine rankings. It spots duplicate content that might confuse search engines and flags technical errors before they impact your site’s performance. This proactive approach keeps your website running smoothly and maintains good relationships with search engines.
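A broken-link check is one of the simpler pieces to sketch. The example below collects links from a made-up page and looks up their status codes in a fake table; in a real crawler the `get_status` callable would make an HTTP request instead. The class and function names are our own:

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collects absolute URLs from every <a href="..."> on a page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        href = dict(attrs).get("href")
        if tag == "a" and href:
            self.links.append(urljoin(self.base_url, href))


def find_broken_links(links, get_status):
    """Return (url, status) pairs for links answering with a 4xx or 5xx code."""
    broken = []
    for url in links:
        status = get_status(url)
        if status >= 400:
            broken.append((url, status))
    return broken


# Made-up page and status codes standing in for real HTTP responses.
PAGE = '<a href="/about">About</a> <a href="/old-offer">Offer</a>'
FAKE_STATUS = {
    "https://example.com/about": 200,
    "https://example.com/old-offer": 404,
}

collector = LinkCollector("https://example.com/")
collector.feed(PAGE)
broken = find_broken_links(collector.links, FAKE_STATUS.__getitem__)
print(broken)
```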

Backlink analysis reveals the hidden connections that boost search rankings. By crawling competitor websites and industry resources, you can discover who’s linking to your competitors and why. This intelligence helps identify potential link-building opportunities and understand what content attracts valuable backlinks in your industry.

Keyword research and SERP tracking through list crawling provides the competitive intelligence that drives smart SEO decisions. You can monitor how your target keywords perform across different search engines and locations, track competitor rankings, and identify emerging keyword opportunities before they become highly competitive.

The relationship between crawling, indexing, and ranking is fundamental to SEO success. Search engines must first crawl your pages to find them, then index the content, and finally rank them in search results. List crawling helps ensure this process works smoothly by identifying pages that search engines might miss or struggle to access.

When your website has excellent crawlability, search engines can easily find and understand your content. This foundation supports better indexing, which ultimately leads to improved rankings. List crawling provides the data needed to optimize this entire process, from identifying technical barriers to understanding how search engines interact with your site.

This comprehensive approach to data collection and analysis transforms raw web information into actionable business intelligence, whether you’re optimizing your website’s performance or researching market opportunities.

Frequently Asked Questions about List Crawling

We get tons of questions about list crawling from folks just starting their data collection journey. These are the ones that come up most often, and we’re happy to share what we’ve learned along the way.

What is the main difference between list crawling and general web scraping?

Think of list crawling as the precision tool in your data collection toolkit. While general web scraping is like using a wide net to catch anything and everything from a website, list crawling is more like using a specialized fishing rod to target specific fish.

List crawling focuses exclusively on extracting structured, repetitive data from lists – things like product catalogs, business directories, or search results. These are collections where each item follows a similar pattern: name, price, description, contact info, and so on.

General web scraping, on the other hand, can target any type of information scattered across a website. It might grab random paragraphs, images, or even entire page layouts. The key difference? List crawling is all about finding patterns and consistency, while general scraping can handle the messy, unstructured stuff too.

Here’s a simple way to think about it: if you’re collecting restaurant names, addresses, and phone numbers from a directory, that’s list crawling. If you’re grabbing random blog posts, images, and comments from various pages, that’s general web scraping.

How can I avoid being blocked while crawling a list?

Getting blocked is probably the biggest headache for anyone doing list crawling. The good news? There are proven ways to fly under the radar and keep your crawlers running smoothly.

Rotate your IP address using proxies – this makes your requests look like they’re coming from different people in different locations. Websites often block based on IP addresses, so mixing things up is crucial.

Switch up your user-agents to mimic different browsers. Your crawler shouldn’t always announce itself as the same browser version. Sometimes it’s Chrome, sometimes Firefox, sometimes Safari – just like real users.

Add natural delays between your requests. Real humans don’t click through pages at lightning speed. Adding 1-3 second pauses between requests makes your crawler look more human and prevents server overload.

Most importantly, respect the robots.txt file. This is like the website’s “house rules” for crawlers. Following these guidelines shows you’re playing fair and significantly reduces your chances of getting blocked.

The golden rule? Don’t be greedy. Slow and steady wins the race when it comes to list crawling.

Is list crawling legal?

This is probably the question we get asked most, and honestly, it’s complicated. The short answer is: it depends on what you’re doing and how you’re doing it.

List crawling of publicly available data is generally legal. If information is visible to anyone visiting a website without logging in, you’re usually in the clear. Think of it like reading a newspaper in a coffee shop – the information is public.

However, you need to play by the rules. Always check the website’s Terms of Service before you start crawling. Some sites explicitly prohibit automated data collection, and violating these terms can get you in trouble.

Data privacy laws like GDPR and CCPA add another layer of complexity. If you’re collecting personal information, you need to handle it responsibly and legally. Scraping someone’s private social media posts or personal emails? That’s definitely off-limits.

Never cause harm to the website you’re crawling. Overloading servers, bypassing security measures, or scraping copyrighted content can land you in legal hot water.

The bottom line? Stick to public data, respect website rules, don’t overwhelm servers, and always prioritize ethical practices. When in doubt, consult with a legal expert who understands data collection laws.

Conclusion

Our journey through the fascinating world of list crawling has taken us from basic definitions to advanced techniques, revealing how this specialized approach to web scraping has become essential for modern businesses and researchers. We’ve found that it’s far more than just a technical tool: it’s a strategic advantage in our data-driven world.

The benefits we’ve explored paint a clear picture of why list crawling matters so much. Time-saving automation replaces hours of manual copying and pasting with minutes of efficient extraction. Cost-effective solutions help businesses gather competitive intelligence without hiring large research teams. Improved accuracy ensures the data we collect is reliable and actionable, whether we’re tracking competitor prices, building sales leads, or analyzing market trends.

What makes list crawling particularly powerful is its versatility across industries. E-commerce businesses use it to monitor pricing strategies. Marketing teams build comprehensive lead databases from business directories. SEO professionals identify broken links and analyze competitor backlinks. Content creators aggregate information from multiple sources to provide better insights to their audiences.

The technical challenges we’ve discussed—from dynamic websites to IP blocks—might seem daunting at first. However, with the right approach using proxies, user-agent rotation, and respectful crawling practices, these obstacles become manageable stepping stones rather than roadblocks. The key is balancing efficiency with responsibility, ensuring we gather valuable data without overwhelming target websites or violating ethical guidelines.

Looking ahead, the integration of AI and Machine Learning promises to make list crawling even more intelligent and autonomous. Automated pattern recognition and natural language processing will reduce manual configuration while improving data quality. This evolution means businesses of all sizes will have access to powerful data collection capabilities that were once available only to large corporations.

The legal and ethical considerations we’ve covered aren’t just compliance checkboxes—they’re the foundation of sustainable data practices. By respecting robots.txt files, following website terms of service, and adhering to privacy laws like GDPR and CCPA, we ensure our list crawling efforts remain both effective and responsible.

As we’ve seen throughout this guide, list crawling empowers businesses and researchers to make data-driven decisions with confidence. Whether you’re just starting your journey into web data extraction or looking to optimize existing processes, the techniques and best practices we’ve covered provide a solid foundation for success.

Just as we at Car News 4 You strive to deliver comprehensive, practical guides across various topics, mastering list crawling opens doors to deeper insights and smarter business decisions. To see how data analysis principles apply in the automotive world, explore our guide to the best adventure-ready vehicles.